5.4 Audio

How can neural networks process audio?

Voice assistants are controlled through spoken language. Because every person speaks differently and places emphasis differently, neural networks are well suited to this task.

As in the previous example, the main task here is to convert the spoken information into numbers that a neural network can process. The following is a very simple example of how this can be achieved. There are, of course, other and more complex methods.

Audio files as images

In the field of image processing, we have become familiar with convolutional neural networks. With their many upstream trainable filters, these networks are very good at recognising structures and features in images, regardless of where they are located within the image. This is precisely what makes them useful for processing audio files. Two voice commands, such as “no” spoken by different people, do not have to start and end at exactly the same millisecond to be processed. Instead, their structure only needs to be similar enough for a neural network to recognise them as alike and classify them into the same category.
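The position independence described above can be illustrated with a minimal sketch. It assumes a single hand-picked filter and a one-dimensional signal instead of a trained network and a real image; the function name `max_filter_response` and the toy pattern are made up for this illustration.

```python
import numpy as np

def max_filter_response(signal, kernel):
    """Slide the kernel over the signal and return the strongest response."""
    # Cross-correlation is the operation a convolutional layer performs.
    responses = np.correlate(signal, kernel, mode="valid")
    # Taking the maximum over all positions discards *where* the match occurred.
    return responses.max()

# A toy "feature" that appears early in one signal and late in the other.
pattern = np.array([1.0, 3.0, 1.0])
early = np.zeros(20)
early[2:5] = pattern
late = np.zeros(20)
late[12:15] = pattern

# The filter is set to the pattern itself (a trained filter would be learned).
kernel = pattern.copy()
r_early = max_filter_response(early, kernel)
r_late = max_filter_response(late, kernel)

print(r_early, r_late)  # both responses are equal despite the shift
```

The filter responds equally strongly to both signals, which is why a command that starts a little earlier or later can still be recognised as the same pattern.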

To turn the audio signals into images, a simple approach is to create a so-called spectrogram of each audio signal. You can easily create such a spectrogram yourself by recording an audio track with the free Audacity software and converting it into a spectrogram using the corresponding switch in the track (see Audacity help). While a regular audio track (i.e. the so-called waveform) only shows the loudness (amplitude) over time, a spectrogram shows how strongly each frequency is present at each point in time.

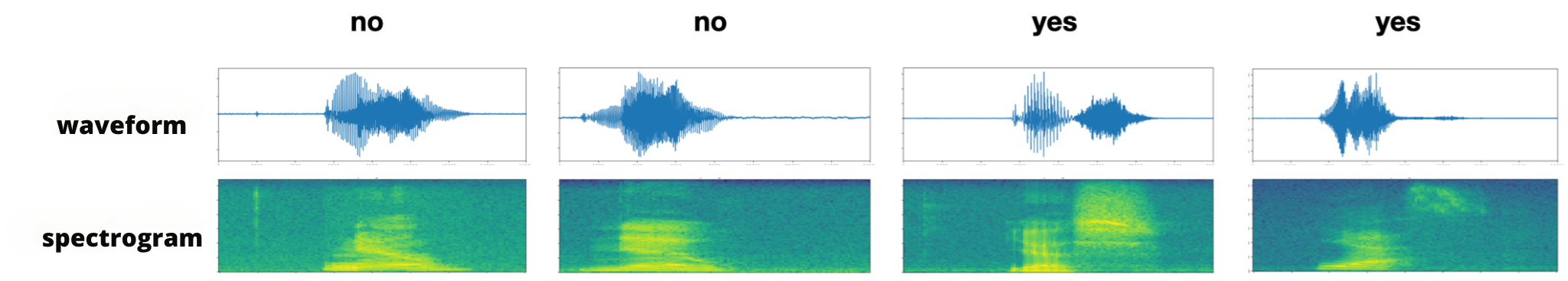
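For readers who prefer code to Audacity, the idea behind a spectrogram can be sketched in a few lines of NumPy. This is only one common way to compute it (a short-time Fourier transform with a Hann window). The frame length, hop size, sample rate, and the synthetic test tone are assumptions chosen for the example, not values taken from the text.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Return a magnitude spectrogram with shape (frequency bins, time frames)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Cut the signal into overlapping, windowed frames.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # One Fourier transform per frame gives the frequency content at that time.
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))
    return magnitudes.T

# Synthetic test signal (assumption): one second at 8 kHz that jumps
# from a 500 Hz tone to a 1500 Hz tone half-way through.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
signal = np.where(t < 0.5,
                  np.sin(2 * np.pi * 500 * t),
                  np.sin(2 * np.pi * 1500 * t))

spec = spectrogram(signal)
freqs = np.fft.rfftfreq(256, d=1 / sample_rate)
dominant = freqs[spec.argmax(axis=0)]  # strongest frequency in each time frame

print(spec.shape)                # (frequency bins, time frames)
print(dominant[0], dominant[-1]) # strongest frequency at the start and at the end
```

The resulting two-dimensional array can be treated like a greyscale image: one axis is time, the other is frequency, and the value of each pixel is how strongly that frequency is present.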

An “o” and an “i” spoken at the same loudness would therefore look very similar in the waveform, because the frequency content that distinguishes them cannot be read off directly. The spectrogram, on the other hand, makes this visible, as can be seen in the example data below, where two instances of the word “no” and two instances of the word “yes” are shown.

Waveform and spectrogram of the words no and yes
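The difference between the two views can also be checked numerically. The sketch below uses two pure tones as rough stand-ins for two different sounds; real vowels are more complex, so the tones, their frequencies, and the helper names `rms` and `peak_frequency` are assumptions made for illustration only.

```python
import numpy as np

sample_rate = 8000
t = np.arange(2048) / sample_rate

# Two sounds with the same amplitude but different frequency content.
low = np.sin(2 * np.pi * 250 * t)
high = np.sin(2 * np.pi * 2000 * t)

def rms(x):
    """Overall loudness of the waveform (root mean square amplitude)."""
    return np.sqrt(np.mean(x ** 2))

def peak_frequency(x):
    """Frequency with the largest magnitude in the spectrum of x."""
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1 / sample_rate)
    return freqs[spectrum.argmax()]

print(rms(low), rms(high))                        # loudness: practically identical
print(peak_frequency(low), peak_frequency(high))  # frequency: clearly different
```

Judged by loudness alone, the two signals are indistinguishable. Only the frequency view separates them.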

The figure suggests that a convolutional neural network would generally have no problem correctly classifying images (i.e. spectrograms) of audio signals corresponding to the words yes and no. After all, spectrograms of the same class are more similar to each other than to spectrograms of the other class. And indeed, such a classification works very well in real life (up to a certain point).
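The claim that spectrograms of the same class resemble each other more than those of different classes can be made concrete with a toy experiment. Note that this is not a convolutional neural network: it uses randomly generated stand-in "spectrograms" and a simple distance between time-averaged frequency profiles, purely to illustrate the similarity argument. All names, shapes, and band positions are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def toy_spectrogram(band_row, start_col):
    """Fake spectrogram: weak noise plus one energy band (rows = frequency)."""
    spec = rng.random((16, 32)) * 0.1
    spec[band_row:band_row + 2, start_col:start_col + 10] += 1.0
    return spec

def distance(a, b):
    """Compare time-averaged frequency profiles, so a time shift does not matter."""
    return np.linalg.norm(a.mean(axis=1) - b.mean(axis=1))

# Two examples per class; within a class only the start time differs.
no_a, no_b = toy_spectrogram(3, 2), toy_spectrogram(3, 15)
yes_a, yes_b = toy_spectrogram(10, 5), toy_spectrogram(10, 18)

within = max(distance(no_a, no_b), distance(yes_a, yes_b))
between = min(distance(n, y) for n in (no_a, no_b) for y in (yes_a, yes_b))

print(within < between)  # same-class examples are closer than cross-class ones
```

A trained convolutional network learns far richer features than this averaged profile, but the underlying reason classification is possible is the same.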
